The launch of the model of R1 from the DeepSeek, a start-up, then a little-known chinese-manufactured, it has shaken the sector of artificial intelligence and much more. Leaving aside for a moment the big questions surrounding the project, the cost of the actual training to your use of the potential of the data from the OpenAI, a man asks himself what is so special about the chatbotin the balenës of the blue, and why the leaders of the great technology we are komplimentojnë the company.

The answers are many, including the technical ones. But let's do it step by step.

Thinking outside the box, and optimizing

DeepSeek is a chinese company founded in the year 2023, from Liang Wenfeng, co-founder of a number of large investment in the High-Flyer. Wenfeng is always protected and a different approach to the research of IT, with the aim to employ men of letters of young people from the universities, to the best of People.". Moreover, the export controls imposed by the United States, does not allow for a startup experience as well as DeepSeek have access to it officially in the technologies of the most recent, as well as the GPU of the highest level to be used for the global market, but most of the products produced specifically for the purpose.

To train a model for the competition of the HE, and the chinese should be the lot of the inventor and the think out of the scheme.

As a result, the Wenfeng want to be surrounded by young people, and professionals outside of the IT sector and in a manner that, to his knowledge, in addition to the many.

It is thanks to this team, to be added to it, taking the value for the notification DeepSeek, the model is R1 asked for just a 2,048 GPU NVIDIA H800 to be trained, taken two months ago and spent a little less than 6 million, a figure which is much lower than the competitors directly to us. It is important to note that these data have been questioned by experts in various IT, but there's an element of, which leaves little room for doubt: interesting findings in the areas of training, and the reasoning are described in the technical documentation.

In a paper published on the arXiv on the 22nd of January, 2025, in fact, the concepts of innovation, for IT has been applied in more concrete terms, including, an alternative way to train a model the artificial intelligence that in fact exceed the techniques are now encouraging the escalation of the conclusions in the time to do the type of reasoning t1 of OpenAI. .

Let's try to simplify the concept, as far as possible, an LLM, that is, a model for the language on a larger scale, trained in three phases.

The first one is the pre-training is required to be given to HIM for a large amount of text on them and make a model to learn general knowledge. This is the stage where HE's learning to predict the next word in a sentence, or a fine, land to the other. As we have already mentioned in these pages are, in fact, IT selects the word to the other, on the basis of probability. However, the training in advance is not sufficient for a model to be in a position to respond in a manner consistent contributions to the human.

This includes the game, the repair of the monitor. Here it begins, in fact, post-training, that is, the use of technology to make the model more efficient and improve their answers.

It's a phrase is essential to the refined model of IT, and in order to do that and should be able to “understand” the needs of the users through an application, such as the labeling of the data, and the training for a specific task.

However, what is needed is a stage, and the third to complete the process of the training: learning, strengthening training, further, the pattern by taking the feedback from the real people (RLHF, Reinforcement learning from Human Feedback), and the patterns and the RLAIF, the Strengthening of the learning from that Feedback. ).

At the end of these three stages, the operation is the training of an LLM end, though, it may be necessary operations and the further he.

The following are the main stages in which the training is structured today, the company, the largest in the sector, HE said.

DeepSeek it has been demonstrated that it is possible to to be eliminated, at least in part, in the second stageranging from the training of the model R1-Zero.

After a phase of pre-training, instead of having to go through the second step, a team of young scholars of chinese used a technique of the new property in the field of learning, strengthening of the so-called The Group's Relative Policy Optimization (GRPO), thus overcoming the Optimization of the Policy Proksimale. (PPO).

The algorithm of the young man, who supports the ability of the high-level reasoning to model for HIM, rewritten in terms of the manner of treatment of the compensation and optimization, eliminating the need for a function to a value. Please note that none of the model, the nerves are not being used to generate each.

In other words, the GRPO it makes it much easier to train a LLM, to reduce the consumption of memory and, by making the training process much less expensive. Moreover, due to what is effectively a lesson in amplifier based on the rules and regulations, the concept can be extended to a more easy-even on a large scale.

DeepSeek was in a state of perfect to me next the techniques of his training, the model of the second, in addition to DeepSeek-R1-Zero, that is, the DeepSeek-R1.

An important aspect of the work it has to do with the concept of scaling in the time trial, which goes through the phases of classical, pre-training and post-training, with a focus on the capacity of the distribution of resources: on the site of the improvement of the parameters, this method is focused on the determination of the the power of the processing, is necessary to produce the answer.

The discovery of the scholars of the DeepSeek, in other words, opens the door to new methods of training, and HE, thus allowing the improvement of the skills of reasoning and the reduction of costs, an evolution in the direction of optimization, is currently being studied by the company of the great united states.

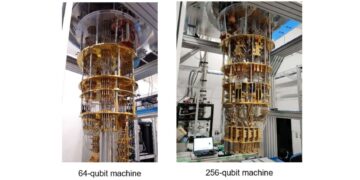

While the promotion of universal values it represents a step forward in efficiency, but this does not mean that the infrastructure of the score on the scale is not necessary to support the development of IT.

However, what is certain is that the DeepSeek has reminded us that the competition for IT is not developed only in the area of hardware, and the quantity, but also in the studies of the global optimization algorithms. Now the ball is in the area of the United States, and the answer of their own, of course, that is not going to delay a lot.

Discussion about this post